Newer imaging technique discovers “glymphatic system”; may hold key to preventing Alzheimer’s disease

A previously unrecognized system that drains waste from the brain at a rapid clip has been discovered by neuroscientists at the University of Rochester Medical Center.

The highly organized system acts like a series of pipes that piggyback on the brain’s blood vessels, sort of a shadow plumbing system that seems to serve much the same function in the brain as the lymph system does in the rest of the body — to drain away waste products.

“Waste clearance is of central importance to every organ, and there have been long-standing questions about how the brain gets rid of its waste,” said Maiken Nedergaard, M.D., D.M.Sc., senior author of the paper and co-director of the University’s Center for Translational Neuromedicine.

“This work shows that the brain is cleansing itself in a more organized way and on a much larger scale than has been realized previously.

“We’re hopeful that these findings have implications for many conditions that involve the brain, such as traumatic brain injury, Alzheimer’s disease, stroke, and Parkinson’s disease,” she added.

The glymphatic system

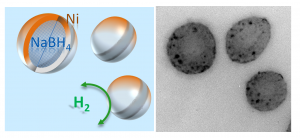

Schematic of two-photon imaging of para-arterial CSF flux into the mouse cortex. Imaging was conducted between 0 and 240 µm below the cortical surface at 1-min intervals. (Credit: Jeffrey J. Iliff et al./Science Translational Medicine)

Nedergaard’s team has dubbed the new system “the glymphatic system,” since it acts much like the lymphatic system but is managed by brain cells known as glial cells. The team made the findings in mice, whose brains are remarkably similar to the human brain.

Scientists have known that cerebrospinal fluid, or CSF, plays an important role in cleansing brain tissue, carrying away waste products and carrying nutrients to brain tissue through a process known as diffusion. The newly discovered system circulates CSF to every corner of the brain much more efficiently, through what scientists call bulk flow or convection.

“It’s as if the brain has two garbage haulers — a slow one that we’ve known about, and a fast one that we’ve just met,” said Nedergaard. “Given the high rate of metabolism in the brain, and its exquisite sensitivity, it’s not surprising that its mechanisms to rid itself of waste are more specialized and extensive than previously realized.”

While the previously discovered system works more like a trickle, percolating CSF through brain tissue, the new system is under pressure, pushing large volumes of CSF through the brain each day to carry waste away more forcefully.

The glymphatic system is like a layer of piping that surrounds the brain’s existing blood vessels. The team found that glial cells called astrocytes use projections known as “end feet” to form a network of conduits around the outsides of arteries and veins inside the brain — similar to the way a canopy of tree branches along a well-wooded street might create a sort of channel above the roadway.

Those end feet are filled with structures known as water channels or aquaporins, which move CSF through the brain. The team found that CSF is pumped into the brain along the channels that surround arteries, then washes through brain tissue before collecting in channels around veins and draining from the brain.

How has this system eluded the notice of scientists up to now?

The scientists say the system functions only in the intact, living brain. That made it very difficult to study for earlier scientists, who could not directly visualize CSF flow in a live animal and often had to study sections of brain tissue that had already died. To study the living, whole brain, the team used a technology known as two-photon microscopy, which allows scientists to look at the flow of blood, CSF and other substances in the brain of a living animal.

While a few scientists two or three decades ago hypothesized that CSF flow in the brain is more extensive than has been realized, they were unable to prove it because the technology to look at the system in a living animal did not exist at that time.

“It’s a hydraulic system,” said Nedergaard. “Once you open it, you break the connections, and it cannot be studied. We are lucky enough to have technology now that allows us to study the system intact, to see it in operation.”

Clearing amyloid beta more efficiently

Left: In red, smooth muscle cells within the arterial wall. The green/yellow is CSF on the outside of that artery. The blue that is visible just on the very edge of the red arterial walls, especially at the red/red arterial branch: those are the water channels, the “aquaporins” discussed in the paper and in the press release, which actually move the CSF. These are critical to this process. Right: much the same, but with the red stripped out, so the focus is on the CSF. Aquaporins still visible. (Credit: Jeffrey Iliff/University of Rochester Medical Center)

First author Jeffrey Iliff, Ph.D., a research assistant professor in the Nedergaard lab, took an in-depth look at amyloid beta, the protein that accumulates in the brain of patients with Alzheimer’s disease. He found that more than half the amyloid removed from the brain of a mouse under normal conditions is removed via the glymphatic system.

“Understanding how the brain copes with waste is critical. In every organ, waste clearance is as basic an issue as how nutrients are delivered. In the brain, it’s an especially interesting subject, because in essentially all neurodegenerative diseases, including Alzheimer’s disease, protein waste accumulates and eventually suffocates and kills the neuronal network of the brain,” said Iliff.

“If the glymphatic system fails to cleanse the brain as it is meant to, either as a consequence of normal aging, or in response to brain injury, waste may begin to accumulate in the brain. This may be what is happening with amyloid deposits in Alzheimer’s disease,” said Iliff. “Perhaps increasing the activity of the glymphatic system might help prevent amyloid deposition from building up or could offer a new way to clean out buildups of the material in established Alzheimer’s disease,” he added.

The work was funded by the National Institutes of Health (grant numbers R01NS078304 and R01NS078167), the U.S. Department of Defense, and the Harold and Leila Y. Mathers Charitable Foundation.
